🤖 What self-driving cars should teach us about generative AI

Even the most promising tech may take longer than expected to have a big impact

Quote of the Issue

“In the long run, optimists shape the future.” - Kevin Kelly

The Essay

Whenever Elon Musk makes some big prediction or sets out some ambitious goal — putting humans on Mars by decade’s end or turning Twitter into a $250 billion payments company — skepticism might seem warranted. After all, he famously forecasted in April 2019, “By the middle of next year, we’ll have over a million Tesla cars on the road with full self-driving hardware.” The autonomous technology would be so sophisticated, Musk promised, a driver “could go to sleep.”

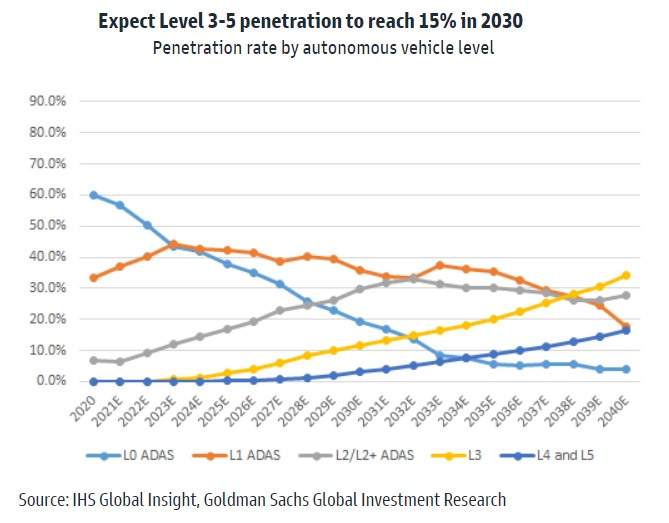

And where is Tesla today? In a February statement addressing the recall of 363,000 Tesla cars (the National Highway Traffic Safety Administration said the automaker’s Full Self-Driving Beta system “may allow the vehicle to act unsafe around intersections”), the company characterized its autonomous technology as a Level 2 driving system. As the graphic below shows, there’s a big distance between Level 2 and Level 5, the latter being what Musk seemed to be describing back in 2019.

Of course, Tesla isn’t the only player here. Waymo operates driverless cars in a ride-hailing service for paying customers in the Phoenix metro area and is expanding into San Francisco and Los Angeles. Cruise, a subsidiary of General Motors, offers a service in San Francisco. But it’s still early days, despite predictions a few years ago that the autonomy revolution would be well underway by now. In 2016, for instance, Lyft co-founder and president John Zimmer predicted self-driving would “all but end” private car ownership in major U.S. cities by 2025.

Here’s a Goldman Sachs overview of self-driving cars from last October:

Will self-driving cars truly take off? The question is whether autonomous vehicles higher than Level 3 are meaningfully worth the effort technologically, socially and profit-wise. On the technology side, advances are needed in both hardware (sensors, etc.) and software. In particular, it is vital that output from AI can be logically explained in order to manage the risk of an accident occurring (XAI, Explainable AI). On the social side, legal frameworks need to be updated, and discussion needs to take place on moral standards, such as the Trolley Problem. These social factors are likely to vary greatly by region. In terms of profitability, the base assumption is that consumers will be willing to pay monthly service charges for the value added by self-driving functions. We estimate Level 3 and higher self-driving cars will account for roughly 15% of sales in 2030 (0% in 2020).

To be clear — whatever the technological and regulatory issues — GS is not saying Level 5 is impossible, but achieving what most people think of as “self driving” isn’t a big part of their outlook, as this chart suggests:

Our societal experience with self-driving technology reminds me of the saying, “Most people overestimate what they can achieve in a year and underestimate what they can achieve in ten years.” It’s a saying that everybody should keep squarely in mind when thinking about and forecasting generative AI as well. Even with a fundamental technological breakthrough, there always needs to be further (and unpredictable) innovation in the technology itself, along with complementary innovations, regulatory responses, and efficient business adoption. And while many of these things seem to be happening at warp speed right now with GenAI, especially with large language models such as the one behind ChatGPT, history suggests the process will take far longer than all the breathless tweeting currently indicates. Here, again, is a great historical summary of this process from Stanford University economist Erik Brynjolfsson:

When American factories first electrified, there was negligible productivity growth for the first 30 years. It was only after the first generation of managers retired and a new generation replaced the old “group drive” organization of machinery, which was optimized for steam engines, with the new “unit drive” approach that enabled assembly lines that we saw a doubling of productivity. Today, despite impressive improvements in AI, not to mention many other technologies, productivity growth has actually slowed down, from an average of over 2.4% per year between 1995-2005 to less than 1.3% per year since then. The bottleneck is not the technology – though faster advances certainly wouldn’t hurt – but rather a lack of complementary process innovation, workforce reskilling and business dynamism. Simply plugging in new technologies without changing business organization and workforce skills is like paving the cow paths. It leaves the real benefits largely untapped. However, by making complementary investments, we can speed up productivity growth.

New regulations will almost certainly be part of that process, as the recent calls for a pause in training large language models suggest. And as those calls show, there will be ideas both good and bad. To avoid creating a regulatory bottleneck, I urge policymakers to take a look at a new analysis by Adam Thierer of R Street, “Getting AI Innovation Culture Right.” Among other things, Thierer argues that the U.S. should foster the development of AI, machine learning and robotics by following the policy vision that drove the digital revolution.

It’s a policy vision based on the Clinton-era Framework for Global Electronic Commerce, which contained five key principles: (1) private sector leadership and market-driven innovation, (2) minimal government regulation and intervention, (3) a predictable, minimalist, consistent and simple legal environment for commerce, (4) recognition of the unique qualities and decentralized nature of the Internet, and (5) facilitation of global electronic commerce and a consistent legal framework. These principles can guide technology policy today just as effectively as they did back then. Thierer concludes:

As policymakers consider governance solutions for AI and computational systems, they should appreciate how a policy paradigm that stacks the deck against innovation by default will get significantly less innovation as a result. Innovation culture is a function of incentives, and policy incentives can influence technological progress both directly and indirectly. Over the last half century, “regulation has clobbered the learning curve” for many important technologies in the United States in a direct way, especially those in the nuclear, nanotech and advanced aviation sectors. Society has missed out on many important innovations because of endless foot-dragging or outright opposition to change from special interests, anti-innovation activists and over-zealous bureaucrats.

Micro Reads

▶ Emergent autonomous scientific research capabilities of large language models - Daniil A. Boiko, Robert MacKnight, Gabe Gomes, arXiv | In this paper, we presented an Intelligent Agent system capable of autonomously designing, planning, and executing complex scientific experiments. Our system demonstrates exceptional reasoning and experimental design capabilities, effectively addressing complex problems and generating high-quality code. However, the development of new machine learning systems and automated methods for conducting scientific experiments raises substantial concerns about the safety and potential dual use consequences, particularly in relation to the proliferation of illicit activities and security threats. By ensuring the ethical and responsible use of these powerful tools, we can continue to explore the vast potential of large language models in advancing scientific research while mitigating the risks associated with their misuse.

▶ White House launching $5 billion program to speed coronavirus vaccines - Dan Diamond, WaPo |

▶ The one number that determines how today’s policies will affect our grandchildren - Kelsey Piper, Vox |

▶ Biden administration is trying to figure out how to audit AI - Cat Zakrzewski, WaPo |

▶ Has 200 Years of Science Fiction Prepared Us for AI? - Stephen Mihm, Bloomberg Opinion |

▶ No, Fusion Energy Won’t Be ‘Limitless’ - Gregory Barber, Wired | The results suggest the answer could vary a lot depending on the cost and mix of other energy sources on the decarbonized grid, like renewables, nuclear fission, or natural gas plants outfitted with carbon capture devices. In most scenarios, fusion appears likely to end up in a niche much like that held by good ol’ nuclear fission today, albeit without the same safety and waste headaches. Both are essentially gargantuan systems that use a lot of specialized equipment to extract energy from atoms so it can boil water and drive steam turbines, meaning high up-front costs. But while the electricity they provide may be more expensive than that from renewables like solar, that electricity is clean and reliable regardless of time of day or weather.

So, on those terms, can fusion compete? The point of the study wasn’t to estimate costs for an individual reactor. But the good news is that Schwartz was able to find at least one design that could produce energy for the right price: the Aries-AT, a relatively detailed model of a fusion power plant outlined by physicists at UC San Diego in the early 2000s. It’s just one point of comparison, Schwartz cautions, and other fusion plants may very well have different cost profiles, or fit into the grid differently depending on how they’re used. Plus, geography will matter. On the East Coast of the US, for example, where renewable energy resources are limited and transmission is constrained, the modeling suggested that fusion could be useful at higher price points than it is in the West. Overall, it’s fair to envision a future in which fusion becomes part of the US grid's “varied energy diet,” he says.

▶ Biden Administration Weighs Possible Rules for AI Tools Like ChatGPT - Ryan Tracy, WSJ |

▶ Google Tells AI Agents to Behave Like 'Believable Humans' to Create 'Artificial Society' - Chloe Xiang, Vice |

▶ Can Intelligence Be Separated From the Body? - Oliver Whang, NYT | But some A.I. researchers say that the technology won’t reach true intelligence, or true understanding of the world, until it is paired with a body that can perceive, react to and feel around its environment. For them, talk of disembodied intelligent minds is misguided — even dangerous. A.I. that is unable to explore the world and learn its limits, in the ways that children figure out what they can and can’t do, could make life-threatening mistakes and pursue its goals at the risk of human welfare. “The body, in a very simple way, is the foundation for intelligent and cautious action,” said Joshua Bongard, a roboticist at the University of Vermont. “As far as I can see, this is the only path to safe A.I.”

▶ This economist won every bet he made on the future. Then he tested ChatGPT - Matthew Cantor, Guardian |

▶ For a greener world, cut red tape - Alex Trembath and Seaver Wang, Boston Globe | While NEPA was intended to — and did — prevent deployment of environmentally damaging infrastructure in the past, today the law prevents more renewable energy projects than oil and gas projects. State-level NEPA copycats, like the notoriously counterproductive California Environmental Quality Act, have been invoked to block bike lanes, a bullet train, and increased student enrollment at the University of California, Berkeley. … The Nuclear Regulatory Commission provides a damning example of how regulatory oversight can be weaponized to stall carbon-reduction projects. Until the new Vogtle-3 reactor in Georgia produced its first electrons for the power grid in March, the Nuclear Regulatory Commission had gone nearly 50 years without ever licensing a reactor technology that went on to produce electricity commercially. Now, the NRC appears ready to impose regulations on a new generation of smaller, safer reactors that are even tighter than the oppressive rules that have stymied nuclear power for decades.

▶ Whatever Happened to the Metaverse? - Parmy Olson, Bloomberg |