💣 The Oppenheimer Fallacy: Don't treat AI like the A-Bomb

We're going to need better analogies for thinking about technological risk and reward

Quote of the Issue

“If by some miracle a prophet could describe the future exactly as it was going to take place his prediction would sound so absurd, so far-fetched that everyone would laugh him to scorn.” - Arthur C. Clarke

The Essay

💣 The Oppenheimer Fallacy: Don't treat AI like the A-Bomb

Item: Geoffrey Hinton was an artificial intelligence pioneer. In 2012, Dr. Hinton and two of his graduate students at the University of Toronto created technology that became the intellectual foundation for the A.I. systems that the tech industry’s biggest companies believe is a key to their future. On Monday, however, he officially joined a growing chorus of critics who say those companies are racing toward danger with their aggressive campaign to create products based on generative artificial intelligence, the technology that powers popular chatbots like ChatGPT. Dr. Hinton said he has quit his job at Google, where he has worked for more than a decade and became one of the most respected voices in the field, so he can freely speak out about the risks of A.I. A part of him, he said, now regrets his life’s work. - The New York Times, 05.02.2023.

The story of scientists and technologists expressing concern about how the rest of us might put their discoveries and inventions to use, or even expressing regret or remorse for their work, is hardly new. This is especially true of the history of atomic weapons. For example: Although he was not a Manhattan Project scientist, Albert Einstein later regretted his letter to President Franklin D. Roosevelt urging the United States to support research by American physicists into producing a nuclear fission reaction. (If only he had known that Nazi Germany was far from developing a bomb.)

And what about the “American Prometheus” himself, Robert Oppenheimer, the physicist and director of the Los Alamos Laboratory that designed the atomic bombs that exploded at Alamogordo, New Mexico (Gadget), and over the Japanese cities of Hiroshima (Little Boy) and Nagasaki (Fat Man)? He gets a mention in that New York Times piece on Geoffrey Hinton. When the former Google AI scientist was asked how he could work on such potentially dangerous technology, he would paraphrase Oppenheimer: “When you see something that is technically sweet, you go ahead and do it.” (The kicker to the piece by NYT reporter Cade Metz: Hinton “does not say that anymore.”)

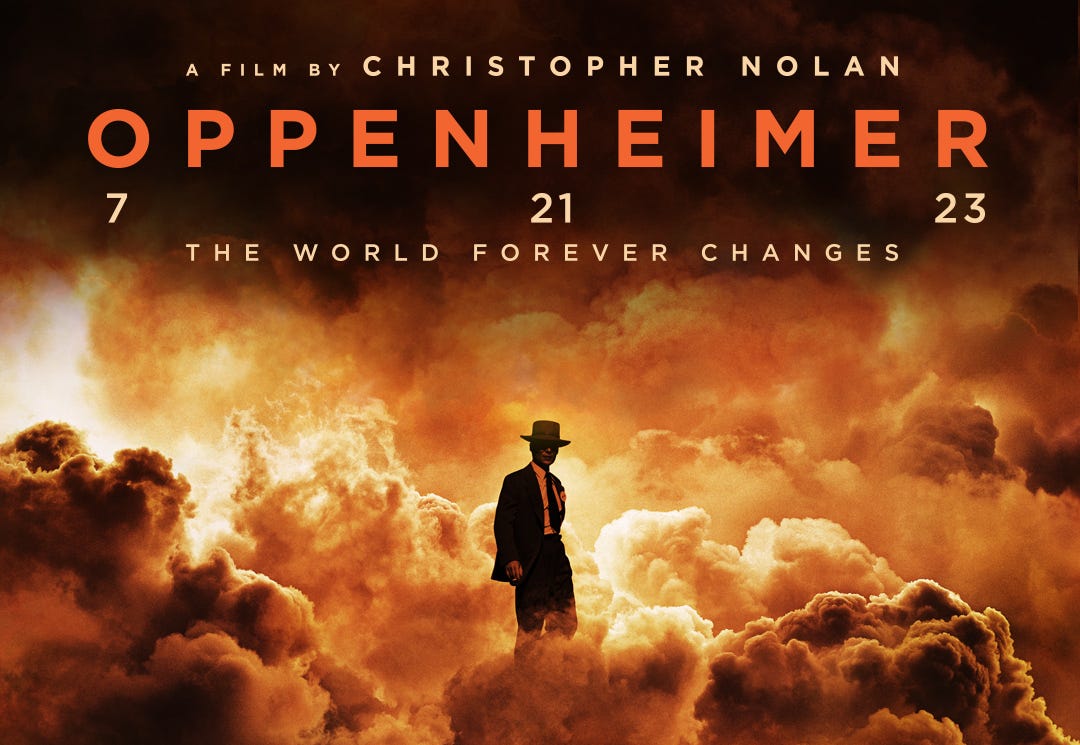

Now, as you might have heard, there’s an Oppenheimer biopic coming out in July, directed by Christopher Nolan and starring Cillian Murphy in the title role. (The advertising for the film is the lead image of this issue of the newsletter.) About two things concerning Oppenheimer, I am reasonably confident: First, it will be yet another outstanding piece of cinema by Nolan. Second, the subject of the film (America/humanity creating a powerful technology that could prove its undoing) and the timing of the film (premiering during a growing public debate about the rewards and risks of a powerful new technology) mean Oppenheimer will almost certainly be used as a way of framing the conversation about AI, at least for a time.
