🤖 Sam Altman and OpenAI: a fork in the road of human destiny?

Will this corporate techno-drama have a huge long-run impact?

Quote of the Issue

“I would, however, argue that you need to know about the Falcon 1 and all that went into it because that machine … quite likely changed the course of human history.” - Ashlee Vance, When the Heavens Went on Sale: The Misfits and Geniuses Racing to Put Space Within Reach

I have a new book out: The Conservative Futurist: How To Create the Sci-Fi World We Were Promised is currently available pretty much everywhere. I’m very excited about it! Let’s gooooo! ⏩🆙↗⤴📈

The Essay


If you’re a regular reader of Faster, Please!, then you probably had a pretty newsy weekend between SpaceX’s latest launch of its Starship rocket and the wild roller-coaster involving OpenAI and its departed (for now) CEO Sam Altman. Two stories involving two important technologies (AI and reusable rockets) and two of the planet’s highest-profile corporate leaders (Altman and Elon Musk). Still, two very different stories: one involved corporate machinations over the future of an important technology, the other a test of an important technology.

I knew I wanted to write about both but was unsure how to connect them in an authentic and meaningful way. Then I saw this tweet (yeah, that’s right, “tweet”) from Elon Musk:

Musk’s assertion assumes, of course, continued progress. While the launch on Saturday had some important positives (the redesigned and rebuilt launch pad, a full burn by the Super Heavy booster’s 33 Raptor engines, the “hot staging” maneuver), both the booster and Starship were lost. And even accounting for SpaceX’s iterative design model (fail fast, fail cheap), there’s still lots of work to do before Starship is a fully reusable launch system that can take humans and cargo to orbit, the Moon, and beyond. As Eric Berger of Ars Technica writes:

Beyond simply getting Starship to space, it must become an orbital vehicle, and both the booster and spacecraft must be made to reliably land. Then SpaceX must learn how to rapidly refurbish the vehicles (which seems possible, given that the company has now landed a remarkable 230 Falcon 9 rockets). The company must also demonstrate and master the challenge of transferring and storing propellant in orbit, so that Starship can be refueled for lunar and Mars missions. Starship must also show that it can light its Raptor engines reliably, on the surface of the Moon in the vacuum of space, far from ground systems on Earth.

If all that happens, then Musk is correct that his space company is responsible for a key civilizational inflection point. Perhaps the critical moment, however, was the successful Sept. 28, 2008, launch of a Falcon 1 rocket after three failures by the cash-strapped company. As Walter Isaacson writes in his biography of Musk, “Unless this fourth launch attempt succeeded, it would be the end of SpaceX and probably of the wacky notion that space pioneering could be led by private entrepreneurs.” But the launch was successful. A pro-progress, Up Wing fork in the road.

Not all forks lead to a better tomorrow, however. Take the case of Project Independence, President Nixon’s response to the early 1970s oil crisis. Invoking both the Project Apollo program and the Manhattan Project, Nixon pledged that by 1980 the United States would “meet America's energy needs from America's own energy resources.” One aspect of this effort would be accelerated licensing of nuclear power plants, helping meet the Atomic Energy Commission forecast of 1,000 nuclear power plants across the country by the year 2000. But it was not to be. As economist Peter Z. Grossman has explained it:

Nixon’s project never went anywhere, because he was never clear not only about what it would cost or how it could be achieved, but even about what, exactly, “energy independence” meant: Would it apply to all energy, or really just oil? Would it mean having only the potential to meet our own needs, as he suggested initially, or actually doing so, as he claimed a few months later?

Also not helping: the end to the oil shocks, Watergate, the rising cost of building nuclear power plants, and the public’s growing concern about the safety of nuclear energy. The Atomic Age dreamt of in the immediate postwar decades fizzled. Today, America has 54 nuclear power plants supplying a fifth of electricity production. A fork of a different sort, then.

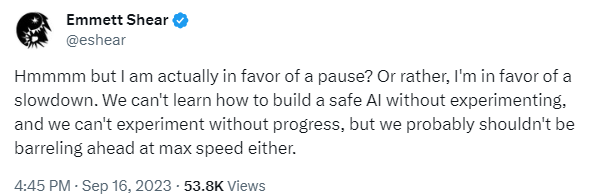

Which leads us to the drama surrounding OpenAI. Did we just see another fork in the road of human destiny, another one in the wrong direction? After all, it seems that the board of the world’s leading AI research company booted CEO Altman for pushing generative AI technology faster than it wanted, then eventually hired an interim CEO, Emmett Shear of the popular videogame-livestreaming service Twitch, who is all in favor of dialing things back a fair bit:

Seems bad, at least if you want to accelerate GenAI progress, potentially leading to artificial general intelligence — a machine able to do all the cognitive things that people can do, if not more — as soon as possible. Again, not the sort of fork most Faster, Please! readers would want.

But I’m optimistic that we’ll roughly stay on that path we’ve been on since ChatGPT was released in November of last year. The chatbot is out of the bag. Too much at stake — from corporate profits to national economic power to national security — for problems at any one entity to derail the Age of AI. If anything, the OpenAI episode puts in stark relief the risks posed by “AI doomers” of the sort that ousted Altman. From The Economist:

Many in the doomer camp are influenced by “effective altruism”, a movement that is concerned by the possibility of AI wiping out all of humanity. The worriers include Dario Amodei, who left OpenAI to start up Anthropic, another model-maker. Other big tech firms, including Microsoft, are also among those worried about AI safety, but not as doomery.

Boomers espouse a worldview called “effective accelerationism” which counters that not only should the development of AI be allowed to proceed unhindered, it should be speeded up. Leading the charge is Marc Andreessen, co-founder of Andreessen Horowitz, a venture-capital firm. Other AI boffins appear to sympathise with the cause. Meta’s Yann LeCun and Andrew Ng and a slew of startups including Hugging Face and Mistral AI have argued for less restrictive regulation.

The doomers grabbed the early lead, as evidenced by President Biden’s executive order on AI, but the boomers seem to be, appropriately enough, accelerating. At worst, Altman will be running a well-financed and well-staffed independent AI group at Microsoft … if not starting his own company … if not returning to OpenAI under more accelerationist terms. So all in all, it looks like we had a pretty good Up Wing weekend.

Micro Reads

▶ Entrepreneurship, Growth and Productivity with Bubbles - Lise Clain-Chamosset-Yvard, Xavier Raurich, and Thomas Seegmuller, SSRN

▶ To Supercharge Science, First Experiment with How It Is Funded - The Economist

▶ Writer Kim Stanley Robinson: ‘If the World Fails, Business Fails’ - Financial Times

▶ Are Americans Falling Out of Love with EVs? - Stephen Wilmot, WSJ

▶ Cruise CEO Vogt Resigns at GM’s Troubled Self-Driving Car Unit - Edward Ludlow, Bloomberg

▶ OpenAI’s Front Men Break Up the Band - Ashlee Vance, Bloomberg

▶ China’s Rise is Reversing - Financial Times

▶ How to Keep the Lid on the Pandora’s Box of Open AI - Financial Times

▶ “It’s Not like Jarvis, but It’s Pretty Close!” — Examining ChatGPT’s Usage among Undergraduate Students in Computer Science - Ishika Joshi, et al., arXiv

▶ Artificial Intelligence and the Skill Premium - David E. Bloom, Klaus Prettner, Jamel Saadaoui, Mario Veruete, SSRN

The article seems overeager to create ideological divides where there really aren't any. Everyone mentioned is genuinely working towards OpenAI's mission, which is "to build safe AI, and ensure AI's benefits are as widely and evenly distributed as possible." Tagging them as doomers or accelerationists is a bit of a stretch. They're just people with different approaches to the same goal. And here's the irony: Elon Musk, hailed in the article for his 'up-wing' accomplishments, actually comes closest to being a 'doomer'. Elon quit OpenAI and has argued for a more cautious stance on AI's pace of development than that taken by Sam and the OpenAI board.