How to get the AI-powered US economy that we want

Also: Coming to Arctic tundra near you: The return of the woolly mammoth (maybe)

“How can we stand in the light of discovery and not act?” - “John Hammond,” Jurassic Park

The Micro Reads: Nuclear fusion, carbon capture, housing, robot-resistant jobs, and much more . . .

The Short Read: The return of the woolly mammoth (maybe)

The Long Read: How to get the AI-powered US economy that we want

The Micro Reads

🏘 The housing theory of everything - Sam Bowman, John Myers, Ben Southwood - Works in Progress | At the very least, a theory that explains a lot. Everything from slow productivity growth to weak wage growth to higher inequality. Of course, while “build, baby, build” is an elegant — or at least straightforward — fix for these problems, it’s also a devilishly difficult political challenge.

🌫 The world’s biggest plant to capture CO2 from the air just opened in Iceland - The Washington Post | From the piece: “At the moment, the costs are high: about $600 to $800 per metric ton of carbon dioxide . . . far from the levels around $100 to $150 per ton that are necessary to turn a profit without the help of any government subsidies. The costs reflect both the hand-hewn nature of the technology — Climeworks’ installations are mostly built by hand for now, not through automation — and also the large amounts of energy needed to power the CO2 capture process.”

⚛ Fusion breakthrough dawns a new era for US energy and industry - Paul Dabbar, The Hill | The most striking line in this op-ed, written by a former undersecretary for science at the US Energy Department who’s now a visiting scholar at Columbia University’s Center on Global Energy Policy, is this: “Everyone should absorb that the fusion age is upon us.” Which is outstanding. But given the policy thrust of this Substack, let me also quote this:

Congress has significantly increased support for fusion in the last few years and the U.S. fusion community has significantly increased efforts — including broad increases at the Department of Energy to help achieve fusion power plants and the community’s development of the first long range fusion plan. . . . [Now it] should look at fully funding certain authorized fusion efforts. Efforts should also be accelerated beyond those levels in certain areas, including increased support for demonstration plants, designing and building power-production portions of a fusion power plant, and further materials testing efforts for the fusion containment.

☎ Automation and the Future of Young Workers: Evidence from Telephone Operation in the Early 20th Century - James Feigenbaum and Daniel P. Gross, NBER | Although most of these jobs disappeared, the result wasn’t a loss of opportunity for the next generation of female workers. The decline in the demand for operators was offset by growth in middle-skill work like secretarial jobs, as well as lower-skill work in service industries. (Incumbent operators were more likely to end up in lower-paying jobs or leave the workforce.) And there’s no reason that dynamic can’t be repeated today. There is an automation-resistant “new middle” emerging, including jobs such as sales representatives, heating and air conditioning mechanics and installers, computer support specialists, and marriage and family counselors.

📱 Mobile Broadband Internet, Poverty and Labor Outcomes in Tanzania - Institute of Labor Economics | Good things happen when poor people are connected to the internet. In this case, 3G access had “a large positive effect on total household consumption and poverty reduction, driven by positive impacts on labor market outcomes. Working age individuals living in areas covered by mobile internet witnessed an increase in labor force participation, wage employment, and non-farm self-employment, and a decline in farm employment.”

The Short Read

🐘 The return of the woolly mammoths

Talk about a New Roaring Twenties. Surely many New York Times readers were surprised to learn this week that legitimate scientists, led by Harvard University biologist George Church, are trying to bring back the woolly mammoth. With $15 million of private funding, Church and his research team have started a company, Colossal, to “support research in Dr. Church’s lab and carry out experiments in labs of their own in Boston and Dallas,” according to the newspaper.

While Colossal investors might hope the startup’s mammoth revivification experiments will lead to new forms of profitable genetic engineering and reproductive technology, Church has stressed the project’s environmental goals. (In addition to DNA tinkering to effectively turn today’s Asian elephants into their hairier and fattier extinct relative, the modified pachyderm embryos would be grown in an artificial womb.) Reinserting neo-mammoths into the Siberian tundra might allow them to engineer that ecosystem as they once did. By breaking up moss and helping restore the region’s grasslands, the animals would make its permafrost less likely to thaw, preventing a decomposition process that releases lots of climate-warming carbon dioxide and methane into the air.

Although I was aware of Church’s effort, I will concede that the NYT piece made me more aware of just how technically difficult a task this is. Yet as an approach to tackling climate change, it seems more realistic and doable than efforts requiring massive changes in rich-nation lifestyles and keeping billions of humans well below those lifestyles permanently. Now I realize how distasteful a for-profit tech enterprise like Colossal must seem to those traditional environmentalists who eschew what they dismiss as technological “fixes.” After all, it was technological progress and economic growth driven by market capitalism that created the climate change problem. Again, one of my favorite insights from economist Joel Mokyr:

Whenever a technological solution is found for some human need, it creates a new problem. As Edward Tenner put it, technology ‘bites back’. The new technique then needs a further ‘technological fix’, but that one in turn creates another problem, and so on. The notion that invention definitely ‘solves’ a human need, allowing us to move to pick the next piece of fruit on the tree is simply misleading.

Maybe we should be happy that Church and his team aren’t instead creating a new Jurassic Park. That would be one bit of technological progress we wouldn’t want to bite back. Let’s hope this woolly mammoth startup is successful and turns into a unicorn.

The Long Read

🤖 How to get the AI-powered US economy that we want

Artificial intelligence, including machine learning, “introduces a fundamental uncertainty into future growth,” according to a 2014 paper by economists John G. Fernald of the San Francisco Fed and Charles I. Jones of Stanford University. For instance: What if capital in the form of software and robots becomes so advanced that it replaces labor entirely? In that case, the researchers conclude, “growth rates could explode, with incomes becoming infinite in finite time.” (Well, at least the incomes of capital owners.)

But the growth potential of an AI-powered economy looks pretty intriguing — even stopping far short of considering science-fictional advances. If AI just boosts growth over the next few decades as much as industrial robots have over the past few decades, that could still mean growth at least 20–25 percent faster than what Wall Street and Washington are currently looking for.

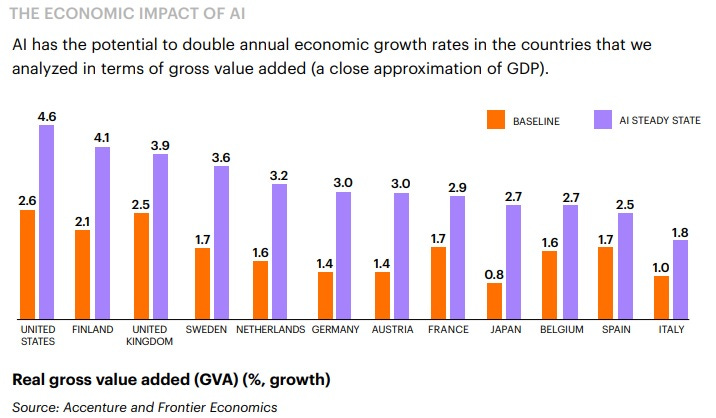

Or maybe it will be much faster. A 2016 analysis by Accenture found AI could increase the annual US growth rate from 2.6 percent to 4.6 percent by 2035, translating to an additional $8.3 trillion in gross value added. From the report: “The combinatorial effect of AI, cloud, sophisticated analytics and other technologies can propel economic growth and potentially serve as a powerful remedy for stagnant productivity and labor shortages of recent decades.”
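To get a feel for what a two-percentage-point difference in growth means once it compounds, here is a minimal back-of-the-envelope sketch. It is not Accenture's methodology and does not reproduce its $8.3 trillion estimate. It simply assumes, for illustration only, that each growth rate holds constant every year, and the horizons (5, 10, and 19 years, the last being roughly 2016 to 2035) and the function names are my own hypothetical choices.

```python
def compound(level: float, annual_rate: float, years: int) -> float:
    """Return the level reached after compounding at a constant annual rate."""
    return level * (1.0 + annual_rate) ** years


def relative_gain(base_rate: float, fast_rate: float, years: int) -> float:
    """Fraction by which the faster-growing economy exceeds the slower one.

    Both economies start at the same size, so the starting level cancels out.
    """
    return compound(1.0, fast_rate, years) / compound(1.0, base_rate, years) - 1.0


if __name__ == "__main__":
    # Illustrative assumption: 2.6 percent vs. 4.6 percent, held constant each year.
    for years in (5, 10, 19):
        gain = relative_gain(0.026, 0.046, years)
        print(f"after {years:>2} years, the faster economy is {gain:.1%} larger")
```

The point of the exercise is only that small-sounding differences in annual growth rates add up to large differences in the size of the economy over a couple of decades.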

So that’s one view of the potential GDP upside, which would be reflected in higher human welfare, such as healthier lives, a more stable climate, a space economy, even flying cars. The downside is explored at length in “Harms of AI,” a new paper by economist Daron Acemoglu, who’s been deeply analyzing the economic impact and policy implications of AI and robotics. Make that downsides, plural. Acemoglu:

I argue that if AI continues to be deployed along its current trajectory and remains unregulated, it may produce various social, economic and political harms. These include: damaging competition, consumer privacy and consumer choice; excessively automating work, fueling inequality, inefficiently pushing down wages, and failing to improve worker productivity; and damaging political discourse, democracy's most fundamental lifeblood. . . . I also suggest that these costs are not inherent to the nature of AI technologies, but are related to how they are being used and developed at the moment — to empower corporations and governments against workers and citizens. As a result, efforts to limit and reverse these costs may need to rely on regulation and policies to redirect AI research. Attempts to contain them just by promoting competition may be insufficient.

Easier recommended than executed. Sure, you could hit social media companies with more rules, such as forcing them to make consumers more aware of what data companies have, how their data are being used, and the cost-benefits of recommended products. But there “is no guarantee that such informational warnings will work for all consumers,” Acemoglu concedes, although such rules might well help prevent some abuses.

And while it would be great if more R&D focused on AI that created new tasks for humans to do rather than automating ones they currently do, “regulators might have a hard time separating AI used for creating new tasks from AI being used for automating low-skill tasks while empowering higher-skilled or managerial workers.” (Acemoglu thinks higher corporate income taxes and lower depreciation allowances would offset a pro-automation bias in the US tax code.)

Finally, AI that weakens democratic politics, such as easily manipulated “echo chambers” on social media, would be hard to regulate after the fact since those in power would probably already be benefitting from such technology. While not seeing this or any other regulatory challenge as insurmountable, Acemoglu wraps up thusly:

Therefore, my conclusion is that the best way of preventing these costs is to regulate AI and redirect AI research, away from these harmful endeavors and towards areas where AI can create new tasks that increase human productivity and new products and algorithms that can empower workers and citizens. Of course, I realize that such a redirection is unlikely, and regulation of AI is probably more difficult than the regulation of many other technologies, both because of its fast-changing, pervasive nature and because of the international dimension. We also have to be careful, since history is replete with instances in which governments and powerful interest groups opposed new technologies, with disastrous consequences for economic growth. Nevertheless, the importance of these potential harms justifies the need to start having such conversations.

This is a rich, serious paper from a widely respected economist, and my summary hardly does it justice. That said, the notion of slowing AI progress and diffusion through a “precautionary regulatory principle” greatly worries me, particularly when it comes to automation. It may well be the case that we risk rampant automation that contributes to anemic income growth and rising inequality rather than technological progress that accelerates productivity growth, supports employment, and generates shared prosperity.

These risks should be taken seriously. I would note that tech-optimist economist Erik Brynjolfsson has also expressed concern about too much tech progress being of the automation sort rather than the new-task-creation kind. (He has also highlighted the scarcity of highly skilled “superstars” as one factor limiting economic growth from “dramatic advances in digital technologies.”) One policy solution would be for government to “focus on increasing the number and productivity of top-percentile workers. This could be done by encouraging high-skill immigration, encouraging creative skills in education or widening access to top universities.”

And yet I would also point out that the 2021 AI Index report, which Brynjolfsson co-authored, found the biggest increase in AI business investment was in the field of drug discovery and other biological uses of AI. That sort of investment uses AI as a “super research assistant” enabling scientists to become more productive. And to be honest, I would rather deal with the challenges outlined by Acemoglu than the ones from more decades of relative stagnation.

I don't think AI should be regulated per se, but I do think there should be more scrutiny of its algorithms. The best argument I've heard against strong AI is not that it will lead to Skynet, but that it will be kind of like smoking, reinforcing maladaptive behaviors that increase human suffering.