✨⏩ AI acceleration: the solution to AI risk

Faster, please!

Last summer, former OpenAI employee Leopold Aschenbrenner published a 165-page analysis, “Situational Awareness: The Decade Ahead,” in which he argues that human-level artificial general intelligence (AGI) will likely emerge by the late 2020s. And when AGI arrives, an "intelligence explosion" could rapidly follow. How? Automated AI researchers could accelerate progress dramatically, potentially leading to superintelligent systems (artificial superintelligence, or ASI) not long after.

Nothing really new here, as amazing as this possibility sounds. It’s a Singularity scenario that AI optimists frequently put forward, though Aschenbrenner’s work history infuses it with added credibility. Yet what really gave the monograph online virality, I think, was Aschenbrenner's focus on the national security implications of such a historic computational event: Whoever achieves superintelligence first could gain decisive military and economic advantages. (Former Google CEO Eric Schmidt has also made this case.)

So it’s concerning, in Aschenbrenner’s view, that current AI lab security is dangerously inadequate, potentially allowing China to steal critical research. This claim leads to his prediction that by 2027-2028, the US government will necessarily take control of AGI development through a Manhattan Project-style initiative. The stakes will be too high to leave AGI to private companies. Aschenbrenner writes:

As the race to AGI intensifies, the national security state will get involved. The USG will wake from its slumber, and by 27/28 we'll get some form of government AGI project . . . I find it an insane proposition that the US government will let a random SF startup develop superintelligence. Imagine if we had developed atomic bombs by letting Uber just improvise.

Indeed, Aschenbrenner concludes his analysis with the following quote from James Chadwick, Nobel laureate in physics and author of a 1941 British government white paper on the potential of an atomic weapon:

I remember the spring of 1941 to this day. I realized then that a nuclear bomb was not only possible — it was inevitable. Sooner or later these ideas could not be peculiar to us. Everybody would think about them before long, and some country would put them into action. . . And there was nobody to talk to about it, I had many sleepless nights. But I did realize how very very serious it could be. And I had then to start taking sleeping pills. It was the only remedy, I’ve never stopped since then. It’s 28 years, and I don’t think I’ve missed a single night in all those 28 years.

The race to superintelligence: promise and peril

A key point: Although Aschenbrenner distances himself from "doomers," he also acknowledges several existential risks from superintelligence. The primary threat isn't misaligned AI itself (AI systems that can't be reliably controlled or trusted to follow human instructions and values) but rather that superintelligence will enable cheap and widespread access to novel weapons and means of mass destruction. And while he believes AI alignment problems are solvable, he warns that racing through the intelligence explosion because of international competition creates extreme risks. He also emphasizes the existential threat of authoritarian control, particularly if China gains superintelligence first.

(That last point is also a scenario addressed by existential-risk researcher Toby Ord in his 2020 book, The Precipice: a “world in chains” where a global totalitarian regime maintains absolute control through surveillance and indoctrination, making resistance impossible. With no internal or external threats, this permanent oppression effectively ends human potential, much like extinction. So, like George Orwell’s Nineteen Eighty-Four, but worse and never-ending.)

Modeling the paradox: Why faster may be safer

Given all the above, I found it interesting that Aschenbrenner recently collaborated on a paper that takes a deeper dive into his concerns and attempts to model them. In last month’s paper “Existential Risk and Growth,” Aschenbrenner, along with Philip Trammell of Stanford University's Digital Economy Lab, explores whether speeding up technological progress increases or decreases the risk of human extinction.

Their finding: New technologies can be dangerous, but going faster is often safer in the long run. First, faster progress means we spend less time in risky phases of development. Think of it like quickly traveling through a dangerous area rather than lingering there. Or: If we climb the ladder slowly, we spend more time on each dangerous rung. (“If you're going through hell, keep going,” as the line often attributed to Winston Churchill puts it.) Early nuclear weapons, for instance, were more dangerous than today's because they had fewer safety protocols and controls. More to the point: If developing AI safely will take 10 steps, moving through those steps in five years rather than 20 years means less total time exposed to AI risks.
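The arithmetic behind the ladder analogy can be sketched with a toy calculation. Assume, purely for illustration, a constant 1 percent chance of catastrophe in each year spent in the risky phase; that figure and the function name `survival_probability` are hypothetical and do not come from Aschenbrenner and Trammell's paper. Because survival compounds year by year, crossing the same ten steps in five years rather than 20 leaves less room for disaster:

```python
# Toy illustration only: a constant annual hazard during the risky phase is an
# assumption for this sketch, not the model in "Existential Risk and Growth".

def survival_probability(years_in_risky_phase: int, annual_hazard: float) -> float:
    """Chance of getting through the risky phase with no catastrophe,
    assuming each year independently carries the same hazard."""
    return (1.0 - annual_hazard) ** years_in_risky_phase

ANNUAL_HAZARD = 0.01  # hypothetical 1% chance of catastrophe per risky year

for years in (5, 20):
    p = survival_probability(years, ANNUAL_HAZARD)
    print(f"{years:>2} years in the risky phase: {p:.1%} chance of getting through")
```

With those made-up numbers, the five-year path gives roughly a 95.1 percent chance of getting through versus about 81.8 percent for the 20-year path. The toy assumes that going faster does not raise the per-year hazard; if it did, the comparison could flip, which is the kind of tradeoff the paper sets out to model.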

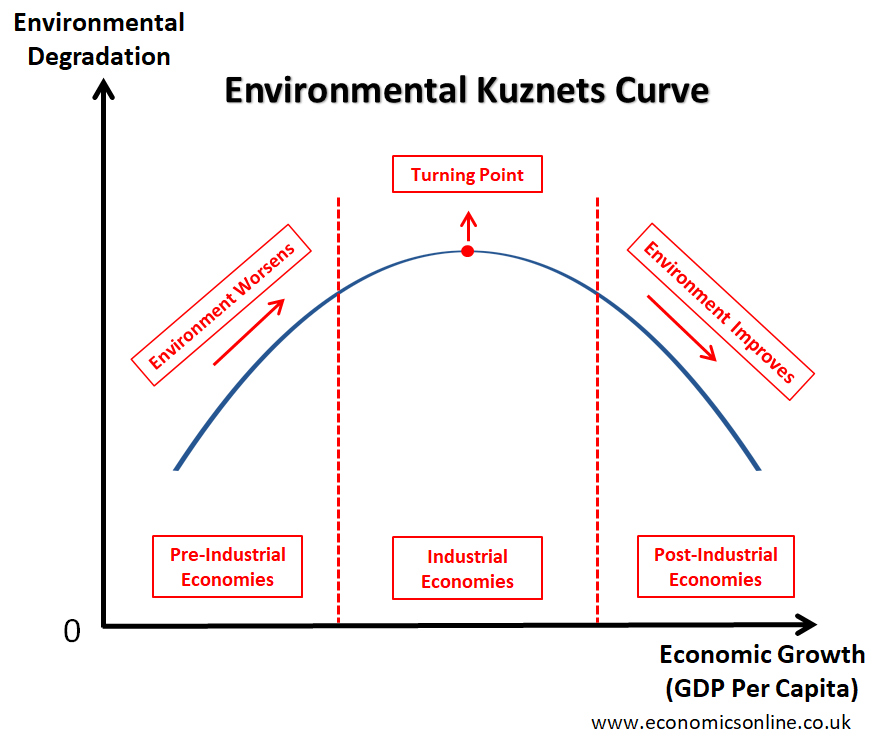

Second, as societies get richer through technological progress, they can afford to spend more on safety measures. Early on, societies might accept risks because they need growth, much as they accepted increased pollution during industrialization. But as countries grow wealthier, they invest more in safety measures and regulations (sometimes too much, as readers of this newsletter know), creating an "existential risk Kuznets curve." Like the original Kuznets curve for environmental harm, existential risks first rise as dangerous technologies are adopted, then fall as wealthy societies prioritize safety over additional growth.
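The inverted-U shape of such a curve can be illustrated with an equally simple toy, again not the functional form used in the paper. Assume, hypothetically, that the hazard from dangerous technology grows in proportion to a society's wealth `w`, while the share of that hazard left unmitigated shrinks exponentially as richer societies buy more safety. The parameters `beta` and `gamma` below are made up for illustration. The product of those two forces rises, peaks at `w = 1 / gamma`, and then falls:

```python
import math

# Toy inverted-U: hazard = beta * w * exp(-gamma * w).
# beta (how dangerous the technology is) and gamma (how fast safety spending
# rises with wealth) are hypothetical parameters chosen for illustration.

def toy_hazard(wealth: float, beta: float = 0.02, gamma: float = 0.5) -> float:
    """Per-period catastrophe risk in a toy 'existential risk Kuznets curve'."""
    return beta * wealth * math.exp(-gamma * wealth)

def peak_wealth(gamma: float = 0.5) -> float:
    """Wealth at which toy_hazard is highest: the derivative
    beta * exp(-gamma * w) * (1 - gamma * w) is zero at w = 1 / gamma."""
    return 1.0 / gamma

for w in (0.5, 1, 2, 4, 8):
    print(f"wealth {w:>3}: hazard {toy_hazard(w):.4f}")
print("risk peaks at wealth", peak_wealth())
```

In this sketch, risk climbs from about 0.0078 at the lowest wealth level to about 0.0147 at the peak, then drops to roughly 0.0029 as safety spending outpaces the danger. The exponential form is just a convenient way to get a hump; any curve where safety spending eventually grows faster than the hazard would tell the same qualitative story.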

From the paper:

Technology brings prosperity. On the other hand, some technological developments have arguably raised, or would raise, existential risk: the risk of human extinction or of an equally complete and permanent loss of human welfare. This raises a possible tradeoff: concern for the survival of civilization may motivate slowing development. Environmentalist sentiments along these lines go back at least to the Club of Rome’s 1972 report on the “Limits to Growth”, and have arguably reemerged with calls to pause AI development. Even if some technological developments directly raise existential risk, however, others may directly lower it. Advances in game theory may render us less vulnerable to nuclear war; vaccines render us less vulnerable to plagues. The prosperity technology brings can also lower existential risk indirectly, by increasing a planner’s willingness to pay for safety. This paper offers an argument that these salutary possibilities probably dominate in the long run, and that the proposed tradeoff is thus typically illusory. That is, concern for long-term survival should typically motivate speeding rather than slowing technological development. Rather than trying to slow down progress, we should focus on managing risks and implementing good safety measures as we advance.

(I should note a different take on this from Stanford University economist Chad Jones, as outlined in his paper, “The AI Dilemma: Growth versus Existential Risk.” He finds that if we care a lot about risk (which most people do), then we should be very cautious about pushing AI forward just for economic benefits. Even infinite wealth might not justify risking extinction. But Jones identifies one important exception: If AI could help humans live longer, we might accept more risk since the benefit directly counters the downside of extinction. How much risk we should accept depends heavily on how we value potential gains versus catastrophic losses.)

Aschenbrenner and Trammell are offering their version of a major point found in my 2023 book: Tech progress and economic growth create wealthy, capable societies able to tackle unforeseen challenges. The greatest risk is in not taking any risks at all. As political scientist Aaron Wildavsky argued, "If you can do nothing without knowing first how it will turn out, you cannot do anything at all." Instead, building societal resilience through continued innovation and development enables flexible responses to new threats. I write: “Rich, technologically advanced nations have options. Assuming adequate governance, they can deploy vast resources and know-how to solve problems as they happen. They don’t need perfect foresight and planning, which is impossible anyway.”

From theory to action: A blueprint for AI governance

If you accept the core argument here (that broad-based technological deceleration may be counterproductive for managing existential risk compared to targeted regulation), what sort of regulation are we talking about? In his long essay, Aschenbrenner concludes with several policy ideas to pursue as soon as possible:

Upgrading AI lab security to prevent China from stealing key breakthroughs.

Ensuring massive AI compute infrastructure is built in the United States rather than abroad, which requires streamlining regulations and rapidly scaling up power production.

Creating a government-industry partnership for AGI development that maintains private-sector innovation while establishing proper oversight and security protocols. This would involve joint ventures between major AI labs, cloud providers, and government agencies.

Building international coalitions with democratic allies for AGI development while creating a nonproliferation regime for the technology.

As it turns out, there’s plenty of broad overlap between those recommendations and a new paper from OpenAI, “AI in America: OpenAI's Economic Blueprint.” Both favor some federal coordination of AI development through public-private partnerships, massive infrastructure investments (especially in compute and energy), development of common security and safety standards, controlled sharing of AI capabilities with democratic allies (while restricting access to adversaries), and the need to accelerate permitting and deployment of AI infrastructure. Both frame their AI approach as a critical national mobilization effort where government plays a key coordinating role while preserving private-sector innovation.

As policymakers think about AI regulation, they need to think hard about the overarching point: Slowing AI progress may be the biggest risk of all.

The Conservative Futurist: How To Create the Sci-Fi World We Were Promised is on sale everywhere.

Micro Reads

▶ Economics

Britain should stop pretending it wants more economic growth - FT Opinion

Is Javier Milei’s economic gamble working? - The Economist

▶ Policy/Politics

Newsom Has a Permitting Epiphany - WSJ Opinion

Falling birth rates raise prospect of sharp decline in living standards - FT

▶ AI/Digital

Asimov's Laws of Robotics Need an Update for AI - IEEE Spectrum

▶ Biotech/Health

Startup Raises $200 Million to Bring Back the Woolly Mammoth - Bberg

US Will Ban Cancer-Linked Red Dye No. 3 in Cereal And Other Foods - Bberg

▶ Clean Energy/Climate

The planetary fix - Aeon

Trump nominee Chris Wright to lay out top 3 goals as energy secretary - Axios

▶ Substacks/Newsletters

Should economists read Marx? - Noahpinion

Crushing the NIMBYs of Middlemarch - Slow Boring

Washington Must Prioritize Mineral Supply Results Over Political Point Scoring - Breakthrough Journal

California Has Its Own Four Horsemen of the Apocalypse - Virginia’s Newsletter