💡 5 Quick Questions for … MIT research scientist Tamay Besiroglu on the huge economic potential of AI

"AI productivity effects will dominate the productivity effects that computers had in the late 20th century."

Are you bullish about the next 25 years of the American economy? I mean, really bullish. Look, I’m not talking about slow-but-steady growth that modestly outperforms, say, the median Federal Reserve forecast of 1.8 percent, inflation adjusted.

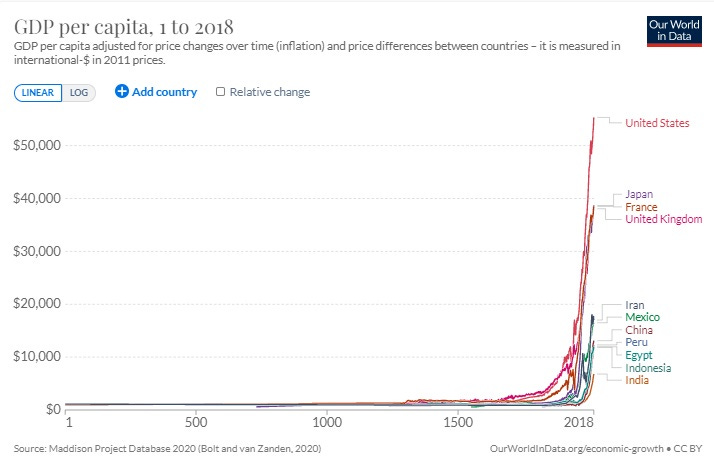

Consider, instead, an economy that grows 50 percent faster. Or how about twice as fast? That may sound crazy, but it would only mean the economy was growing as fast as it did on average over the second half of the 20th century. Let me put it another way: I would love to see a productivity-led boom so strong that economists and technologists start to wonder whether the exponential-growth Singularity is nigh. That's the sort of thing that happened during the 1990s.
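To make those scenarios concrete, here is a small back-of-the-envelope sketch. It takes only the 1.8 percent Fed baseline and the 25-year horizon from the text, reads "50 percent faster" and "twice as fast" as 2.7 and 3.6 percent a year, and assumes growth compounds at a constant annual rate, which real economies never do exactly.

```python
def cumulative_growth(annual_rate: float, years: int) -> float:
    """Ratio of final to initial output after compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years


BASELINE = 0.018  # median Fed forecast cited above (real, annual)
YEARS = 25        # horizon from the opening question

# Assumed constant annual growth rates for each scenario.
scenarios = {
    "baseline (1.8%)": BASELINE,
    "50% faster (2.7%)": BASELINE * 1.5,
    "twice as fast (3.6%)": BASELINE * 2.0,
}

for label, rate in scenarios.items():
    multiple = cumulative_growth(rate, YEARS)
    print(f"{label}: economy is {multiple:.2f}x its starting size after {YEARS} years")
```

Under those assumptions the economy ends up about 1.56, 1.95, and 2.42 times its starting size, so doubling the growth rate delivers roughly 2.5 times the cumulative gain (about 142 percent versus 56 percent).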

For any scenario even remotely like those to happen, we're going to need much faster productivity growth. Remember, those past years of fast growth were helped along by robust labor force growth. But thanks to Baby Boomer retirements and a lower fertility rate, labor force growth is now more of a drag on the economy's potential growth rate. So productivity will need to do the heavy lifting.
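The arithmetic behind "productivity will need to do the heavy lifting" is a simple accounting identity: output equals labor input times output per unit of labor, so (1 + output growth) = (1 + labor growth) × (1 + productivity growth). The sketch below assumes that identity and plugs in hypothetical labor force growth rates (illustrations, not forecasts) to show how much productivity growth a 3.6 percent target would require.

```python
def required_productivity_growth(target_output_growth: float, labor_growth: float) -> float:
    """Labor-productivity growth needed to reach a target output growth rate.

    Output = labor input x output per unit of labor, so
    (1 + g_output) = (1 + g_labor) * (1 + g_productivity).
    """
    return (1.0 + target_output_growth) / (1.0 + labor_growth) - 1.0


TARGET = 0.036  # "twice as fast" as the 1.8% baseline

# Hypothetical labor-force growth rates: a faster past vs. a slower present.
for labor_growth in (0.015, 0.005):
    g_p = required_productivity_growth(TARGET, labor_growth)
    print(f"labor force +{labor_growth:.1%}/yr -> productivity must grow {g_p:.2%}/yr")
```

With these hypothetical inputs, slowing labor force growth from 1.5 to 0.5 percent a year raises the required productivity growth from about 2.07 to about 3.08 percent a year.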

And to be bullish on productivity growth is to be bullish on AI. Here’s what that means: AI would need to boost worker productivity across a broad swath of business sectors. It would need to be a “general-purpose technology,” or GPT, much like factory electrification in the 1920s. Now it’s not hard to imagine a wide variety of sectors affected by AI, everything from retail product recommendations to customer service chatbots to business analytics for better decision making.

But what really gets me excited is that AI isn’t just potentially an immensely powerful GPT but also an IMI, an invention of a method of invention. “IMIs raise productivity in the production of ideas, while GPTs raise productivity in the production of goods and services,” writes University of Warwick economist Nicholas Crafts in the 2021 paper “Artificial intelligence as a general-purpose technology: an historical perspective.” AI could be an “antidote,” as Crafts puts it, to the finding that big ideas are becoming harder to find.

For more on this subject, I emailed some relevant questions to Tamay Besiroglu, a visiting research scientist at MIT’s Computer Science and Artificial Intelligence Laboratory, where his work focuses on the economics of computing and big-picture trends in machine learning. He also recently did this, which is what led me to him:

1/ How optimistic are you that AI will deliver significant productivity gains in the 2020s?

I think that there is only a modest chance—say, around 25 percent—that by the end of this decade, AI will significantly boost aggregate US productivity growth (by “significantly,” I have in mind something like reverting to the 2 percent productivity growth rate that we observed before the productivity slowdown that occurred in the early 2000s).

I’m not more optimistic because boosting aggregate productivity is a tall order. In the past, few technologies—even powerful, general-purpose, and widely adopted ones like the computer—have had much of an effect. Deep learning has been applied with some success to a few problems faced by large tech companies (such as facial recognition, image detection, recommendations, and translation, among others). However, this has benefited only a small sliver of the overall economy (IT produces around 3 percent of US GDP). It also does not seem likely that AI has enhanced the productivity of technology companies by a large enough margin to produce economy-level productivity effects.
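Why a sector worth about 3 percent of GDP can't move the aggregate much is easy to see with a rough, first-order approximation: the economy-wide productivity gain is roughly the sector's share of GDP times the sector's own gain. The sketch assumes that approximation (it ignores spillovers, input-output linkages, and reallocation between sectors), and the IT productivity gains it tries are hypothetical.

```python
def aggregate_productivity_gain(sector_share: float, sector_gain: float) -> float:
    """First-order approximation: economy-wide gain ~= sector's GDP share x sector's gain.

    Ignores spillovers to other sectors, input-output linkages, and reallocation.
    """
    return sector_share * sector_gain


IT_SHARE = 0.03  # "IT produces around 3 percent of US GDP"

# Hypothetical one-time productivity gains within IT, chosen only to show scale.
for it_gain in (0.10, 0.30):
    agg = aggregate_productivity_gain(IT_SHARE, it_gain)
    print(f"IT productivity +{it_gain:.0%} -> economy-wide +{agg:.1%}")
```

Even a hypothetical 30 percent gain in IT productivity works out to under 1 percent economy-wide, and spread over a decade that is less than 0.1 percentage point of extra growth a year.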

Over longer timescales—say, 15 or 30 years—I think there are good reasons to expect that conservative extensions of current deep learning techniques will be generally useful and reliable enough to automate a range of tasks in sectors beyond IT, notably in manufacturing, energy, and science and engineering. Concretely, I think it is more likely than not that over such a time frame AI productivity effects will dominate the productivity effects that computers had in the late 20th century.

Given the importance of technological progress for driving economic growth among frontier economies, I pay particular attention to the use of AI tools for automating key tasks in science and engineering, such as drug discovery, software engineering, chip design, and so on. The widespread augmentation of R&D with AI could enable us to improve the productivity of scientists and engineers. Automating relevant tasks will also enable us to scale up aggregate R&D efforts, since it is much easier to scale up computer hardware and software for AI than to increase the number of human scientists and engineers. I think it's possible that by the middle of this century, the widespread augmentation of R&D with AI could increase productivity growth rates by 5-fold or more.
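To get a feel for what a 5-fold increase in productivity growth would mean, the sketch below compares doubling times. It assumes a baseline equal to the roughly 2 percent pre-slowdown rate mentioned in the first answer, so a fivefold increase means 10 percent a year; both that baseline and constant-rate compounding are simplifying assumptions, not part of Besiroglu's claim.

```python
import math


def doubling_time(annual_rate: float) -> float:
    """Years for a quantity growing at `annual_rate` per year (compounded) to double."""
    return math.log(2) / math.log(1.0 + annual_rate)


BASELINE = 0.02         # assumption: ~2% pre-slowdown productivity growth
BOOSTED = 5 * BASELINE  # "5-fold or more", taking the lower bound

for label, rate in (("baseline", BASELINE), ("5-fold", BOOSTED)):
    print(f"{label}: {rate:.0%}/yr, productivity doubles every {doubling_time(rate):.1f} years")
```

At 2 percent a year, productivity doubles about every 35 years; at 10 percent, about every 7.3 years.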


Subscribe to Faster, Please! to keep reading this post and get 7 days of free access to the full post archives.